This is the companion website to my one-semester course on machine learning, co-taught with Prof. Christoph Wuersch from the NTB Buchs. The course is part of the Master of Science in Engineering (MSE) program in computer science and data science, offered jointly by the engineering faculties of the Swiss universities of applied sciences. This website reflects the state of the course as of the spring term 2019. You can find the underlying didactic concept here (in German).


Syllabus

All links lead to documents as of spring term 2019.

Additional material [optional]:

- Deep Learning in the wild (video in English): a 60-minute summary of our lessons learned in applied deep learning research in industry and business

- Was kann KI leisten? ("What can AI achieve?", video in German): a 90-minute summary of this one-semester course

Resources

Current slides, lab descriptions, terms & conditions: see Moodle (you need a valid account as an enrolled student).

Video recordings: see YouTube playlist for spring 2019.

Audio-only recordings: see Collecture for spring 2018 recordings (you need to create a free account).

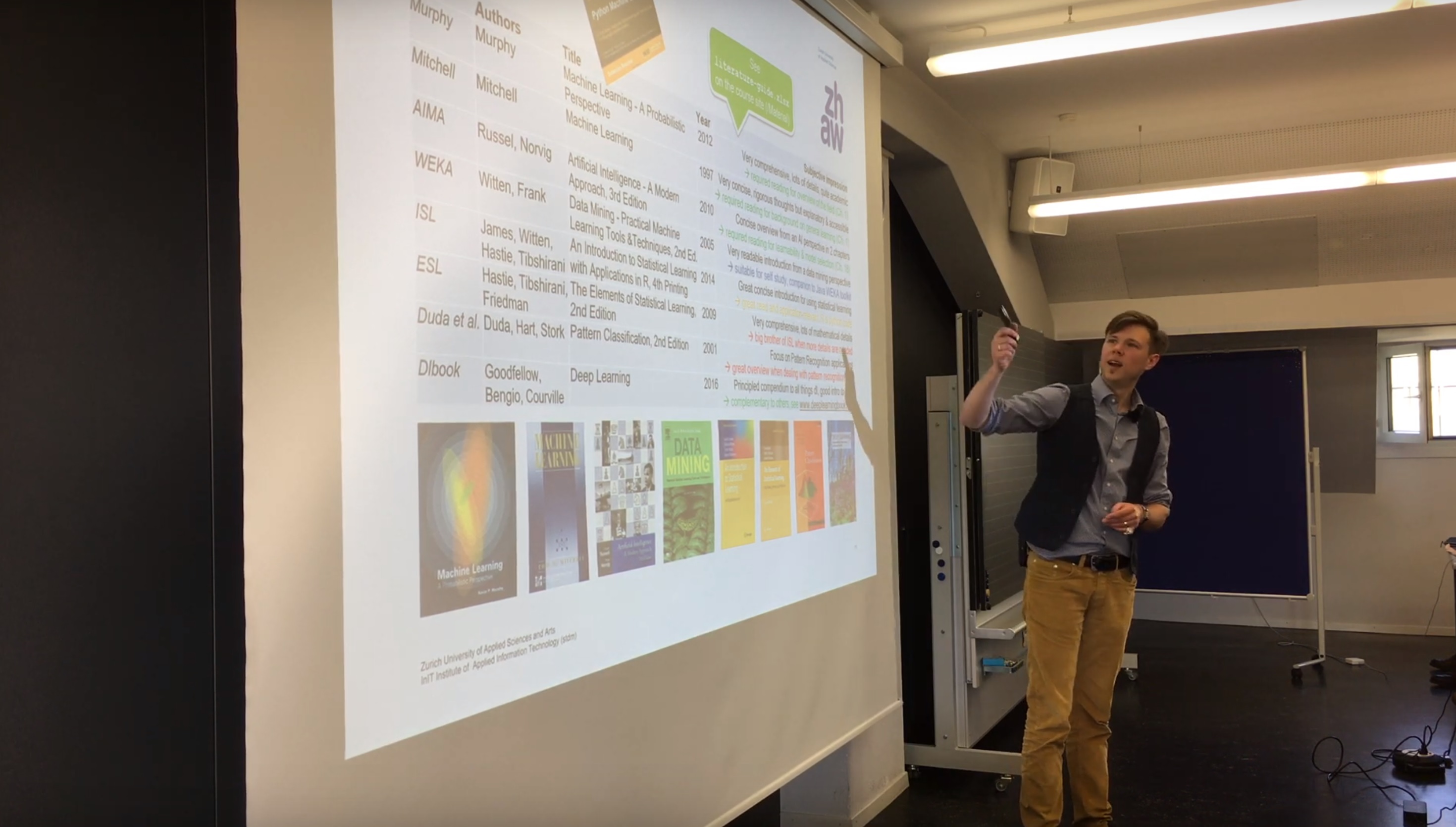

Books:

- T. Mitchell, “Machine Learning”, 1997

- C. M. Bishop, “Pattern Recognition and Machine Learning”, 2006

- G. James et al., “An Introduction to Statistical Learning”, 2014

- K. P. Murphy, “Machine Learning: A Probabilistic Perspective”, 2012

- S. Raschka, “Python Machine Learning”, 2017 (2nd Edition)

- further literature list

Educational objectives

- Students know the background and taxonomy of machine learning methods.

- On this basis, they formulate given problems as learning tasks and select a suitable learning method.

- Students are able to convert a data set into a feature set that fits the task at hand.

- They evaluate the chosen approach in a structured way using proper design of experiments.

- Students know how to select models, and how to “debug” features and learning algorithms if results do not meet expectations.

- Students are able to use the evaluation framework to tune the parameters of a given system and optimize its performance.

- Students have seen examples of different data sources and problem types and are able to acquire additional expert knowledge from the scientific literature.

Machine learning (ML) emerged out of artificial intelligence and computer science as the academic discipline concerned with “giving computers the ability to learn without being explicitly programmed” (A. Samuel, 1959). Today, it is the methodological driver behind the mega-trends of big data and data science. ML experts are highly sought after in industry and academia alike.

This course builds upon basic knowledge in math, programming and analytics/statistics, as typically acquired in undergraduate courses across the engineering disciplines. From there, it teaches the foundations of modern machine learning techniques with a focus on practical applicability to real-world problems. The complete process of building a learning system is considered:

- formulating the task at hand as a learning problem;

- extracting useful features from the available data;

- choosing and parameterizing a suitable learning algorithm.
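The three steps above can be illustrated with a minimal sketch. It is not taken from the course material: the toy data set, the two hand-crafted features, the helper names `extract_features` and `knn_predict`, and the choice of k-nearest neighbours are all assumptions made purely for illustration.

```python
import math
from collections import Counter

# Step 1: formulate the task as a learning problem.
# Assumed here: binary classification of a raw message as "spam" or "ham".
# The tiny data set is made up for illustration only.
TRAIN = [
    ("WIN a FREE prize NOW!!!", "spam"),
    ("CLICK here for CASH!!", "spam"),
    ("URGENT!!! Claim your REWARD", "spam"),
    ("Are we still meeting at noon?", "ham"),
    ("Please find the report attached.", "ham"),
    ("Thanks for the slides, see you in the lab.", "ham"),
]


# Step 2: extract useful features from the available data.
def extract_features(message):
    """Map a message to (exclamation mark count, fraction of uppercase letters)."""
    letters = [c for c in message if c.isalpha()]
    if letters:
        upper_fraction = sum(c.isupper() for c in letters) / len(letters)
    else:
        upper_fraction = 0.0
    return (float(message.count("!")), upper_fraction)


# Step 3: choose and parameterize a learning algorithm.
# Assumed here: k-nearest neighbours with Euclidean distance; k is the parameter.
def knn_predict(train, message, k=3):
    query = extract_features(message)
    by_distance = sorted(
        train,
        key=lambda example: math.dist(extract_features(example[0]), query),
    )
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


prediction_spam = knn_predict(TRAIN, "FREE CASH!!! CLICK NOW", k=3)
prediction_ham = knn_predict(TRAIN, "Could you send me the lab description?", k=3)
print(prediction_spam, prediction_ham)
```

In a realistic setting the features would be scaled to comparable ranges, and the value of `k` would be chosen on held-out data rather than fixed by hand.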

Covered topics include cross-cutting concerns such as ML system design and debugging (how to gain intuition about learned models and results) as well as feature engineering; covered algorithms include, among others, Support Vector Machines (SVM) and ensemble methods.

Prerequisites

Math: basic calculus / linear algebra / probability theory (e.g., derivatives, matrix multiplication, normal distribution, Bayes’ theorem)

Statistics: basic descriptive statistics (e.g., mean, variance, covariance, histograms, box plots)

Programming: good command of any structured programming language (e.g., Python, Matlab, R, Java, C, C++)

Analytics: basic data analysis methods (data pre-processing, decision trees, k-means clustering, linear & logistic regression)