Review of SGAICO Annual Assembly & Workshop, EPFL Biotech Campus, Geneva, 12.11.2015

The Swiss AI community gathered in Geneva this week. Fog on the verge of drizzle couldn’t becloud what was going to be an inspiring meeting in good company under the impressive roof of EPFL’s Biotech Campus.

I am going to share a few random notes. The complete program, abstracts, slides and posters can be found on the SGAICO website.

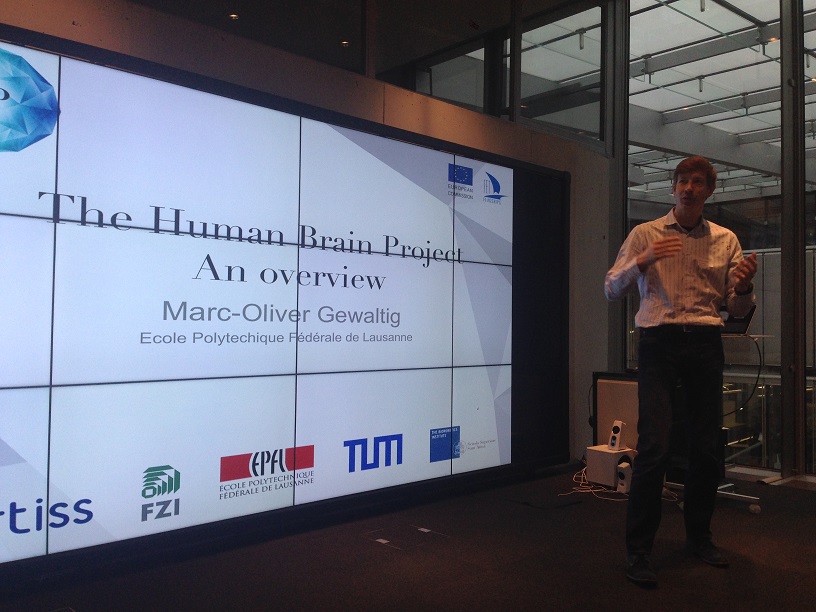

The conference started with an introduction to the Human Brain Project, which also hosted the event. Marc-Oliver Gewaltig took us on a journey through this giant EU flagship project with a total budget of nearly 1 billion euros (half of it coming from the EU).

For me, the most interesting part was this (probably older) quote in his presentation: “The value of a model is not in what it explains, but in what it doesn’t explain”. What a model explains is the knowledge you put in while designing it, so the rest (the things it cannot explain) is what you are actually interested in learning.

“Big data” is also a topic in the HBP, as most of the project is about collecting more data and then turning it into a model (computer simulations of brain parts). In the end, robotics was also touched upon, with a very interesting example that indicates what the brain is really for: the sea squirt lives the first half of its life as an animal, moving around in the sea. Then it turns into a kind of “plant”, builds roots into the ocean bed and becomes immobile. Then it digests its own brain because “it is not needed anymore”. Marc-Oliver drew the conclusion that “the nervous system is literally there to move us around”.

Herve Bourlard, director at the IDIAP research institute, gave the second talk, exposing us to the always important theme of how to raise money for good research. Drawing on lessons learned from the ROCKIT project on conversational interaction technologies and its possible successors, he showed what a roadmap for developing such technologies could look like, both technically and in terms of project management and fundraising.

Interesting to me was his statement on data privacy in the big data age: we (as individuals in a society) could use the same technology that exploits private data (machine learning etc.) to always be informed whether anyone is using our private data, and who. This would level the playing field somewhat: if a company accesses my health data, I get informed. This in turn makes companies (or whoever, for that matter) think twice, because everything is transparent. An interesting idea, but challenging to apply.

Renauld Richardet then presented results from his Ph.D. thesis concerning NLP for neuroscientists: when neuroscientists want to model connections between different brain regions, they have to screen the scientific literature in which such connections are established and published. The relevant literature appears on PubMed at a rate of roughly one article per minute, so exhaustive manual screening is impossible.

Renauld applied information retrieval techniques to this body of literature, which helped bring down the time a neuroscientist needs for a manual literature review from one week to two hours. His approaches to named entity recognition, topic modeling and agile text mining are also applicable in other domains.

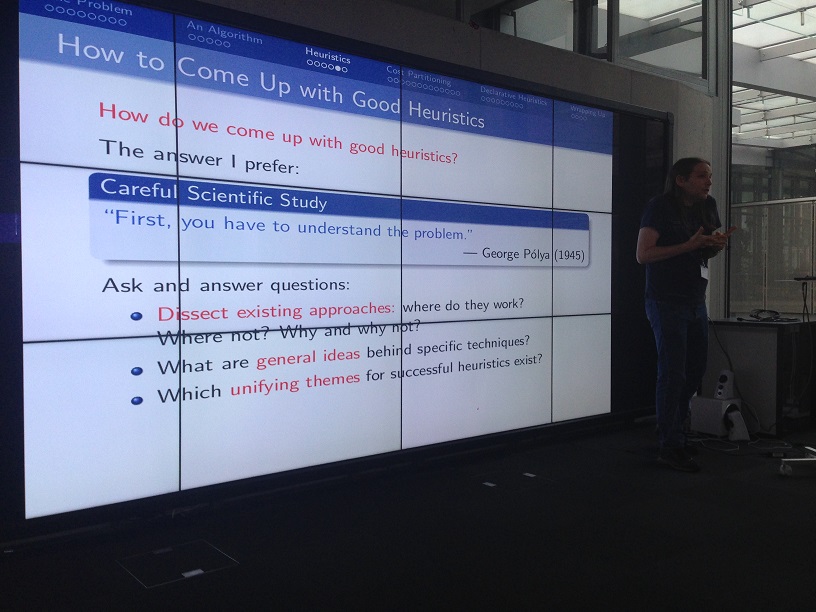

Malte Helmert from Uni Basel reopened after lunch with a fantastic introduction to the classic AI subfield of planning via heuristic search. Drawing an arc from general questions (“what is a good heuristic?”, “how can we generalize by reasoning?”) to recent research, he gave a very inspiring overview of his field and ongoing work.
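To make the question “what is a good heuristic?” a bit more concrete, here is a minimal sketch of heuristic search (A*) on a small grid. It is my own toy illustration, not something shown in the talk: the grid, the function names and the choice of the Manhattan distance as heuristic are all assumptions. On a 4-connected grid with unit step costs, the Manhattan distance never overestimates the remaining cost, which is what lets A* return a shortest path.

```python
import heapq

def manhattan(a, b):
    """Heuristic: grid distance ignoring walls (never overestimates here)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def astar(grid, start, goal):
    """Return the length of a shortest path from start to goal, or None.

    grid is a list of rows; 0 marks a free cell, 1 marks a wall.
    Moves are up/down/left/right with cost 1 each.
    """
    rows, cols = len(grid), len(grid[0])
    # Priority queue entries: (cost so far + heuristic, cost so far, cell)
    frontier = [(manhattan(start, goal), 0, start)]
    best_cost = {start: 0}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            return cost
        if cost > best_cost.get(cell, float("inf")):
            continue  # stale queue entry, a cheaper route was found already
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    priority = new_cost + manhattan(nxt, goal)
                    heapq.heappush(frontier, (priority, new_cost, nxt))
    return None  # goal unreachable

# Hypothetical example map: a wall forces a detour around the right side.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
BLOCKED = [
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]

if __name__ == "__main__":
    print(astar(GRID, (0, 0), (2, 0)))
    print(astar(BLOCKED, (0, 0), (2, 0)))
```

The heuristic only steers the order in which cells are expanded; replacing it with a function that always returns 0 would give the same answer with more expansions, which is one simple way to see what a “good” heuristic buys you.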

Being a teacher myself, what I liked most was the reference to the book by George Pólya that answers such questions (originally for math teachers) with regard to helping students learn this art of reasoning (see the slide in the picture).

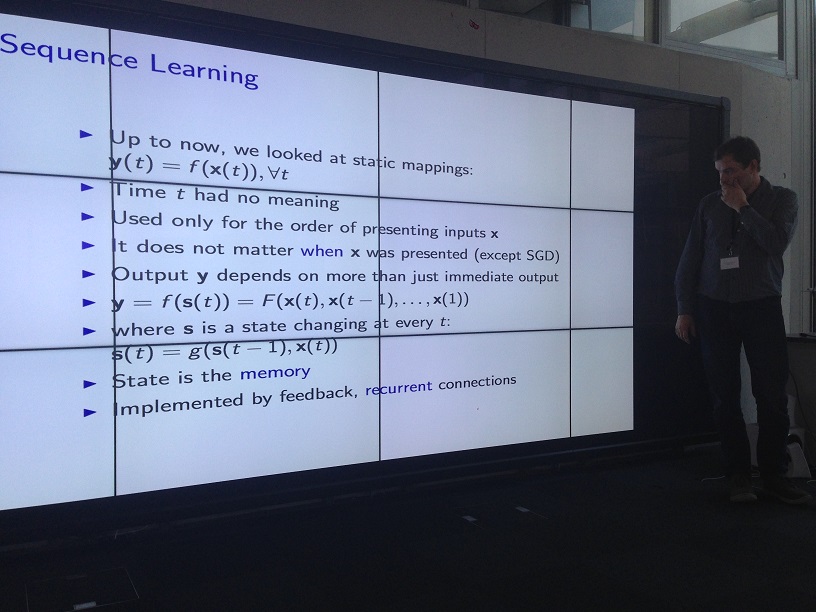

In the last speaking slot, Jan Koutnik from IDSIA gave us a very good introduction to Recurrent Neural Networks (RNNs) as trainable general computers (RNNs are Turing complete) and optimal tools for sequence learning.

An interesting discussion started when Jan came to the holy grail of machine learning, Reinforcement Learning (RL). Current deep learning techniques (CNNs, RNNs etc.) are very good models for perceiving sensory input in a way that wasn’t possible 5 years ago, but AI happens only when these perceptions are used to control an entity (a robot, a simulation, …), which in turn changes the environment and leads to new perceptions, and so on. The question was whether such RL controllers can be learned at all, using the fate of turkeys at Thanksgiving as an illustrative example:

If the turkey learns by RL how to survive, it will die at Thanksgiving anyway, because this event is only perceived once in a lifetime. My thought was: humans have overcome this “learning singularity” by leveraging the experience of others and educating themselves (e.g., by writing down experiences and reading them). Our (reinforcement) learning approaches need to learn not only from the actions of a single agent, but from the whole population (of course this is what happens e.g. in MarI/O, where the Mario figure dies a thousand deaths before a speedrun is properly learned).
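The turkey argument can be played through in a few lines of Python. This is only a toy sketch of my own, not a real RL algorithm and not something from the talk: the day count, the reward values and all function names are made up, and “learning” is reduced to a lookup table of rewards observed for staying on the farm on a given day.

```python
# Hypothetical toy world: all constants are illustrative assumptions.
THANKSGIVING = 5       # day on which staying on the farm is fatal
FEED_REWARD = 1.0      # reward for staying on an ordinary day
DEATH_REWARD = -100.0  # "reward" for staying on Thanksgiving

def live_one_life(knowledge):
    """Simulate one turkey.

    knowledge maps day -> reward previously observed for staying.
    The turkey stays unless it knows that staying on that day is bad.
    Returns (survived, experience gathered during this life).
    """
    experience = {}
    survived = True
    for day in range(1, THANKSGIVING + 1):
        if knowledge.get(day, 0.0) < 0:
            break  # known to be fatal: flee the farm and survive
        if day == THANKSGIVING:
            experience[day] = DEATH_REWARD
            survived = False
        else:
            experience[day] = FEED_REWARD
    return survived, experience

def isolated_learners(n):
    """Each turkey starts from scratch and learns only from its own life."""
    return [live_one_life({})[0] for _ in range(n)]

def population_learners(n):
    """Turkeys live one after another and share what they experienced."""
    shared = {}
    outcomes = []
    for _ in range(n):
        survived, experience = live_one_life(shared)
        shared.update(experience)  # "writing down" the experience for others
        outcomes.append(survived)
    return outcomes

if __name__ == "__main__":
    print(isolated_learners(3))
    print(population_learners(3))
```

In this sketch every isolated turkey dies, because the one observation that matters arrives too late to act on. With shared experience only the first turkey has to die; all later ones can read about day 5 and leave in time.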

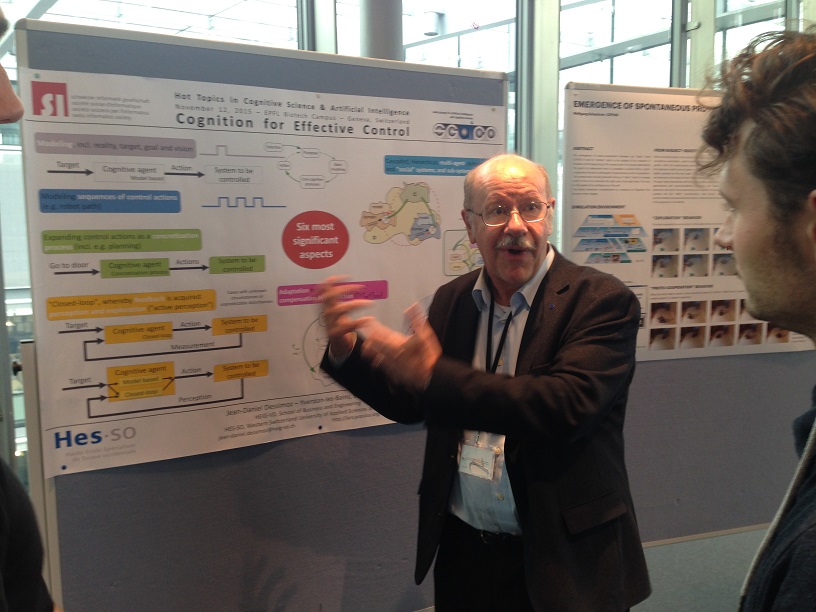

The conference finished with a poster session with lively participation and plenty of discussions. For me, the SGAICO Annual Assembly and Workshop 2015 has been a very good investment of time: very interesting and high-quality talks on diverse topics in AI that already spun off some ideas; plenty of time to talk to the experts in a friendly, informal atmosphere; the chance to discuss some of my own ideas and strengthen relationships with colleagues on several levels, from students to heads of institutes.